During clinical trials of neurodegenerative diseases such as Parkinson’s disease and Alzheimer’s disease, patients have frequent face time with their clinician to conduct tests that monitor disease progression.

In addition to evaluating speech and facial movements, clinicians use patients’ responses to these tests to gauge how far the disease has progressed.

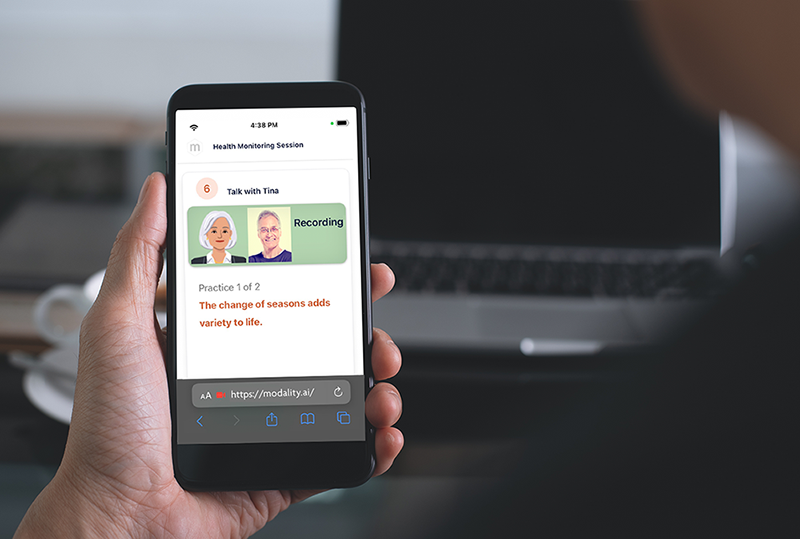

David Suendermann-Oeft, founder and CEO of San Francisco-based Modality.AI, saw an opening where artificial intelligence (AI) could assist.

AI is making inroads in several areas of healthcare, especially digital biomarkers, but this type of chatbot is a unique experience for this patient population. All a patient needs is a quiet space and a device with both a camera and a microphone.

Suendermann-Oeft, who appeared on a panel at SCOPE 2024, did not want to create a bot that performed the standardised tests, but said he understood the need for patients to be able to have a person they could talk to about their disease and symptoms. This is what led to the creation of Tina.

Modality.AI’s software is being used in patients with various neurological and neurodegenerative conditions including amyotrophic lateral sclerosis (ALS), Alzheimer’s disease, Parkinson’s disease and schizophrenia.

Tina leads patients through a variety of cognitive tests, depending on their disease, and also allows them to speak and share their thoughts. The technology is already in use by Johnson & Johnson, but if uptake increases, it could save investigators time when evaluating patients.

This interview has been edited for length and clarity.

Abigail Beaney: Tell me about Tina, and what inspired you to create a service like this?

David Suendermann-Oeft: We want this technology to be as relatable as possible to the patient, so that they can build a relationship with Tina and have confidence in carrying out these regular assessments to provide as much evidence as possible to pharmaceutical companies and sponsors using the technology.

We found technical ways in which you could capture information, for example an app where you capture 15 seconds of speech and then hold a camera in front of your face and do some facial exercises, but that is not very relatable; it's very technical.

We believe that including relatability can make it a much more pleasant experience. In fact, patients report that they prefer to speak to Tina over a physician or human because she is never judgemental and always patient. You can have as much time as you want expressing yourself and sharing your thoughts.

AB: The AI software picks up cues from facial movements and vocalisations. What cues does Tina pick up on?

DS: Tina picks up on anything that patients say and do. If you are a neurologist talking to your patient on Zoom, anything you would pick up from this interaction is what Tina can also see and hear. We worked with key opinion leaders and neurologists in specific disease areas to establish which questions and tasks Tina needed to conduct. These change depending on the specific condition. Take Parkinson's disease, for example, a movement disorder in which patients' communication is impaired: patients have tremors and their finger movements slow down, they speak with a very low voice that can be hard to understand, facial movements slow down towards the end of the disease, and patients have a phase where they are unable to express emotion. All of these things can be measured in tasks that Tina provides.

AB: After Tina provides that assessment, does a clinician see the entire video or is this process also handled by AI?

DS: The clinician is an automatic clinician. The AI algorithm goes over the data and extracts over 400 facial landmarks per video frame, or landmarks of your joints and fingers. Going back to the Parkinson’s example, the finger tapping test is a common test conducted by clinicians. Tina captures this test through the video feed and calculates the frequency and the amplitude of finger movements.

No clinician needs to review the video or the audio, but they can if they wish to check the accuracy and reproducibility. Videos are available in the dashboard, so clinicians can go directly to the segments of the task they are interested in. The principle, however, is that this is an automated system.
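
To make the finger tapping measurement concrete, here is a minimal sketch of how a tapping frequency and amplitude could be estimated from per-frame fingertip landmarks. It is not Modality.AI's method, which the interview does not describe. The function name `tapping_metrics`, the input layout (one x, y pair per frame for the thumb tip and the index fingertip) and the use of a Fourier spectrum and a 5th-to-95th percentile range are all assumptions made for illustration.

```python
import numpy as np


def tapping_metrics(thumb_xy, index_xy, fps):
    """Estimate tapping frequency (Hz) and amplitude from fingertip landmarks.

    thumb_xy, index_xy: arrays of shape (n_frames, 2), hypothetical landmark
    coordinates in any consistent unit. fps: video frame rate.
    """
    thumb_xy = np.asarray(thumb_xy, dtype=float)
    index_xy = np.asarray(index_xy, dtype=float)
    if thumb_xy.shape != index_xy.shape or len(thumb_xy) < 4:
        raise ValueError("need matching landmark arrays with at least 4 frames")

    # Aperture: distance between thumb tip and index fingertip in each frame.
    aperture = np.linalg.norm(index_xy - thumb_xy, axis=1)

    # Dominant tapping frequency: largest non-DC peak in the magnitude spectrum.
    centered = aperture - aperture.mean()
    spectrum = np.abs(np.fft.rfft(centered))
    freqs = np.fft.rfftfreq(len(centered), d=1.0 / fps)
    dominant_hz = float(freqs[1:][np.argmax(spectrum[1:])])

    # Amplitude (assumed definition): 5th-to-95th percentile range of the
    # aperture, which is less sensitive to single-frame tracking glitches
    # than a raw max-minus-min.
    amplitude = float(np.percentile(aperture, 95) - np.percentile(aperture, 5))
    return dominant_hz, amplitude
```

In a sketch like this, slower or smaller taps show up as a lower dominant frequency or a narrower aperture range, which is the kind of change the interview describes for Parkinson’s disease.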

AB: For patients who have multiple interactions, does ML help Tina learn more about each patient?

DS: There is a change in the experience that patients have, but the system does not learn or adapt to their behaviour. Different stimulus materials are provided from test to test. For example, describing a picture is a typical task in cognitive assessments, where clinicians measure the use of nouns and function words, the frequency and length of pauses, and reaction speeds. These pictures change from session to session, as do the sentence intelligibility probes, but other things remain the same. This ensures it remains an interesting experience for patients from session to session. The reason we don't adapt to the specific patient is that in clinical trials, investigators are looking for standardised protocols that are identical across the population to remove any bias or variability in the data.
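
As an illustration of the pause and reaction measures mentioned for the picture description task, the sketch below computes them from word-level timestamps. It assumes a speech recogniser has already produced a start and end time for each word; the `Word` record, the `pause_metrics` function and the 0.25-second pause threshold are hypothetical choices for this example, not details given in the interview.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the recording
    end: float


def pause_metrics(words, prompt_end=0.0, min_pause=0.25):
    """Summarise reaction time and pauses for one spoken response.

    words: time-ordered list of Word records (hypothetical ASR output).
    prompt_end: time at which the task prompt finished, in seconds.
    min_pause: assumed threshold; shorter gaps count as normal articulation.
    """
    if not words:
        raise ValueError("no words in the response")

    # Reaction time: delay between the end of the prompt and the first word.
    reaction_time = words[0].start - prompt_end

    # Silent gaps between consecutive words; keep those above the threshold.
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    pauses = [gap for gap in gaps if gap >= min_pause]

    speaking_span = words[-1].end - words[0].start
    return {
        "reaction_time_s": reaction_time,
        "pause_count": len(pauses),
        "mean_pause_s": sum(pauses) / len(pauses) if pauses else 0.0,
        "pauses_per_minute": (
            60.0 * len(pauses) / speaking_span if speaking_span > 0 else 0.0
        ),
    }
```

Because the threshold and definitions are fixed in advance rather than tuned per patient, a calculation of this kind fits the standardised, non-adaptive protocol described in the answer above.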

AB: How well have clinicians, key opinion leaders and patients responded to Tina?

DS: We have had excellent responses, specifically from patients who report having a very pleasant experience, and that goes back to the question of why we did it this way. We wanted to make sure Tina is something that's relatable for the patient and that they can build a trusting relationship with the platform, so they feel comfortable opening up about their condition.

Not only do patients go through functional assessments, but they are also able to describe their problems in their own words. Tina is really about building trust, because this way you can identify what matters to patients and their experience with their disease.